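The best way to learn a database is to start with its official documentation. MongoDB publishes official documentation in English, and a Chinese translation also exists, although the translation seems unfinished and quite a few passages are still in English.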

At the time of writing, the latest MongoDB release is 3.4. Note: MongoDB 3.4 no longer supports 32-bit x86 systems.

(1) Installation:

(1.1) Prepare three machines: mongodb1, mongodb2 and mongodb3. On each of the three machines:

Create a yum repository file so that MongoDB can then be installed with yum:

```bash
vi /etc/yum.repos.d/mongodb-org-3.4.repo
```

File contents:

```
[mongodb-org-3.4]
name=MongoDB Repository
baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/3.4/x86_64/
gpgcheck=1
enabled=1
gpgkey=https://www.mongodb.org/static/pgp/server-3.4.asc
```
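The release series appears in three places in this file: the section name, `baseurl` and `gpgkey`. The minimal sketch below generates the file from the series number. `mongoRepoFile` is a hypothetical helper, and the sketch assumes that other series follow the same URL pattern as 3.4, so check the official installation guide before using it for another version.

```javascript
// Sketch: build the yum .repo file text for a MongoDB release series.
// Assumption: other series use the same URL layout as the 3.4 file above.
function mongoRepoFile(series) {
  return [
    `[mongodb-org-${series}]`,
    "name=MongoDB Repository",
    // $releasever stays literal on purpose: yum expands it, not JavaScript
    `baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/${series}/x86_64/`,
    "gpgcheck=1",
    "enabled=1",
    `gpgkey=https://www.mongodb.org/static/pgp/server-${series}.asc`,
  ].join("\n") + "\n";
}

// Print the 3.4 file; as root, redirect it into /etc/yum.repos.d/mongodb-org-3.4.repo.
console.log(mongoRepoFile("3.4"));
```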

(1.2) Install with yum:

```bash
sudo yum install -y mongodb-org
```

Note: the installation may fail with an error like this:

```
[root@mongodb2 mongodb]# sudo yum install -y mongodb-org
Loaded plugins: refresh-packagekit, security
Existing lock /var/run/yum.pid: another copy is running as pid 2389.
Another app is currently holding the yum lock; waiting for it to exit...
  The other application is: PackageKit
    Memory : 151 M RSS (461 MB VSZ)
    Started: Sat Feb 18 23:48:39 2017 - 42:48 ago
    State : Sleeping, pid: 2389
Another app is currently holding the yum lock; waiting for it to exit...
  The other application is: PackageKit
    Memory : 151 M RSS (461 MB VSZ)
    Started: Sat Feb 18 23:48:39 2017 - 42:50 ago
    State : Sleeping, pid: 2389
^C

Exiting on user cancel.
```

Kill the process that holds the yum lock (here PackageKit, pid 2389), then run the install again:

```bash
[root@mongodb2 mongodb]# kill -9 2389
```

On each of the three machines, create the following directories. If the `tree` command is missing, install it with `yum -y install tree`.

```
[root@mysqlfb-01-CentOS67-CW-17F u01]# pwd
/u01
[root@mysqlfb-01-CentOS67-CW-17F u01]# tree
.
└── mongodbtest
    ├── config
    │   ├── data
    │   └── log
    ├── mongos
    │   └── log
    ├── shard1
    │   ├── data
    │   └── log
    ├── shard2
    │   ├── data
    │   └── log
    └── shard3
        ├── data
        └── log
```

mongos stores no data, so it needs no data directory.

Port assignment:

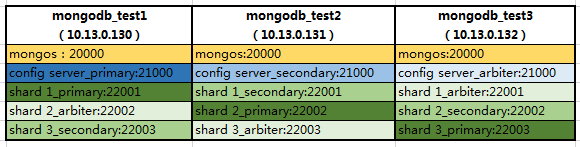

mongos uses port 20000 and the config server uses 21000. shard1 uses 22001, shard2 uses 22002 and shard3 uses 22003.
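This layout has three hosts. Each host runs one config server, one member of every shard and one mongos. It can be written down as data, which makes a typo in a port or path easy to spot. The sketch below uses a hypothetical helper, not a MongoDB tool, to regenerate the start command each host runs from the base directory and the port table. The output should match the commands used in the steps that follow.

```javascript
// Sketch of this walkthrough's layout (values copied from the text above).
const BASE = "/u01/mongodbtest";
const HOSTS = ["10.13.0.130", "10.13.0.131", "10.13.0.132"];
const PORTS = { mongos: 20000, config: 21000, shard1: 22001, shard2: 22002, shard3: 22003 };

// Returns the commands every host runs, in start order: the config server,
// then one member of each shard, and mongos last (mongos is started only
// after the replica sets have been initiated).
function startCommands() {
  const cmds = [
    `mongod --configsvr --replSet cfgReplSet --dbpath ${BASE}/config/data ` +
      `--port ${PORTS.config} --logpath ${BASE}/config/log/config.log --fork`,
  ];
  for (const shard of ["shard1", "shard2", "shard3"]) {
    cmds.push(
      `mongod --shardsvr --replSet ${shard}ReplSet --port ${PORTS[shard]} ` +
        `--dbpath ${BASE}/${shard}/data --logpath ${BASE}/${shard}/log/${shard}.log ` +
        `--fork --nojournal --oplogSize 10`
    );
  }
  // mongos needs the config replica set name plus its members
  const configDb = "cfgReplSet/" + HOSTS.map((h) => `${h}:${PORTS.config}`).join(",");
  cmds.push(
    `mongos --configdb ${configDb} --port ${PORTS.mongos} ` +
      `--logpath ${BASE}/mongos/log/mongos.log --fork`
  );
  return cmds;
}

startCommands().forEach((c) => console.log(c));
```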

(I) Config server configuration:

1. Start a config server on each of the three servers:

```bash
mongod --configsvr --replSet cfgReplSet --dbpath /u01/mongodbtest/config/data --port 21000 --logpath /u01/mongodbtest/config/log/config.log --fork
```

```
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]# mongod --configsvr --replSet cfgReplSet --dbpath /u01/mongodbtest/config/data --port 21000 --logpath /u01/mongodbtest/config/log/config.log --fork
about to fork child process, waiting until server is ready for connections.
forked process: 15190
child process started successfully, parent exiting
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]#
```

Note: the `--replSet cfgReplSet` option is required starting with MongoDB 3.4, because from 3.4 on the config servers must themselves be deployed as a replica set.

2. Configure the config servers as a replica set.

Connect to any one of the config servers:

```bash
mongo --host 10.13.0.130 --port 21000
```

```
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]# mongo --host 10.13.0.130 --port 21000
MongoDB shell version v3.4.2
connecting to: mongodb://10.13.0.130:21000/
MongoDB server version: 3.4.2
Server has startup warnings:
2017-02-20T18:01:05.528+0800 I STORAGE [initandlisten]
2017-02-20T18:01:05.528+0800 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine
2017-02-20T18:01:05.528+0800 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten]
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten]
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten]
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten]
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2017-02-20T18:01:05.603+0800 I CONTROL [initandlisten]
>
```

3. Initiate the replica set.

On the config server you just connected to, run:

```
rs.initiate({_id:"cfgReplSet",configsvr:true,members:[{_id:0,host:"10.13.0.130:21000"},{_id:1,host:"10.13.0.131:21000"},{_id:2,host:"10.13.0.132:21000"}]})
```

```
> rs.initiate({_id:"cfgReplSet",configsvr:true,members:[{_id:0,host:"10.13.0.130:21000"},{_id:1,host:"10.13.0.131:21000"},{_id:2,host:"10.13.0.132:21000"}]})
{ "ok" : 1 }
cfgReplSet:SECONDARY>
```

The prompt shows SECONDARY right after `rs.initiate()` because the election has not finished yet. After a moment it should switch to PRIMARY.
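The document passed to `rs.initiate()` is plain data. It contains a replica set name, `configsvr: true`, and one member per config server with sequential `_id` values. The small sketch below builds that document from the host list, which makes it easy to regenerate when the hosts change. `configServerReplSet` is a hypothetical helper, not a MongoDB function.

```javascript
// Sketch: build the rs.initiate() document for the config server replica set.
// configsvr: true marks the set as a config server replica set.
function configServerReplSet(name, hosts, port) {
  return {
    _id: name,
    configsvr: true,
    // member _id values are simply 0, 1, 2, ... in host order
    members: hosts.map((host, i) => ({ _id: i, host: `${host}:${port}` })),
  };
}

const cfgDoc = configServerReplSet(
  "cfgReplSet",
  ["10.13.0.130", "10.13.0.131", "10.13.0.132"],
  21000
);
// JSON is also valid mongo shell syntax, so the printed line can be pasted into rs.initiate(...)
console.log(JSON.stringify(cfgDoc));
```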

(II) Configure the shards:

1. On each server, start shard1 as a replica set member:

```bash
mongod --shardsvr --replSet shard1ReplSet --port 22001 --dbpath /u01/mongodbtest/shard1/data --logpath /u01/mongodbtest/shard1/log/shard1.log --fork --nojournal --oplogSize 10
```

```
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]# mongod --shardsvr --replSet shard1ReplSet --port 22001 --dbpath /u01/mongodbtest/shard1/data --logpath /u01/mongodbtest/shard1/log/shard1.log --fork --nojournal --oplogSize 10
about to fork child process, waiting until server is ready for connections.
forked process: 15372
child process started successfully, parent exiting
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]#
```

`--nojournal` turns off journaling and `--oplogSize 10` caps the oplog at 10 MB. Both keep this small test cluster lightweight, but they are not production settings.

2. Connect to any one of the shard servers:

```bash
mongo --host 10.13.0.130 --port 22001
```

```
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]# mongo --host 10.13.0.130 --port 22001
MongoDB shell version v3.4.2
connecting to: mongodb://10.13.0.130:22001/
MongoDB server version: 3.4.2
Server has startup warnings:
2017-02-20T18:30:11.763+0800 I STORAGE [initandlisten]
2017-02-20T18:30:11.763+0800 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine
2017-02-20T18:30:11.763+0800 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten]
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten]
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten]
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten]
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2017-02-20T18:30:11.860+0800 I CONTROL [initandlisten]
>
```

3. On the shard server you are logged into, create and initiate the replica set. The third member, 10.13.0.132, is an arbiter:

```
use admin
rs.initiate({_id:"shard1ReplSet",members:[{_id:0,host:"10.13.0.130:22001"},{_id:1,host:"10.13.0.131:22001"},{_id:2,host:"10.13.0.132:22001",arbiterOnly:true}]})
```

```
> use admin
switched to db admin
> rs.initiate({_id:"shard1ReplSet",members:[{_id:0,host:"10.13.0.130:22001"},{_id:1,host:"10.13.0.131:22001"},{_id:2,host:"10.13.0.132:22001",arbiterOnly:true}]})
{ "ok" : 1 }
shard1ReplSet:SECONDARY>
```

4. Repeat the same steps for shard2 and shard3:

4.1 On each host, start shard2 as a replica set member:

```bash
mongod --shardsvr --replSet shard2ReplSet --port 22002 --dbpath /u01/mongodbtest/shard2/data --logpath /u01/mongodbtest/shard2/log/shard2.log --fork --nojournal --oplogSize 10
```

4.2 On each host, start shard3 as a replica set member:

```bash
mongod --shardsvr --replSet shard3ReplSet --port 22003 --dbpath /u01/mongodbtest/shard3/data --logpath /u01/mongodbtest/shard3/log/shard3.log --fork --nojournal --oplogSize 10
```

4.3 Log in to any one of the shard servers (here 10.13.0.131) and initiate shard2:

```bash
mongo --host 10.13.0.131 --port 22002
```

```
rs.initiate({_id:"shard2ReplSet",members:[{_id:0,host:"10.13.0.131:22002"},{_id:1,host:"10.13.0.132:22002"},{_id:2,host:"10.13.0.130:22002",arbiterOnly:true}]})
```

4.4 Log in to any one of the shard servers (here 10.13.0.132) and initiate shard3:

```bash
mongo --host 10.13.0.132 --port 22003
```

```
rs.initiate({_id:"shard3ReplSet",members:[{_id:0,host:"10.13.0.132:22003"},{_id:1,host:"10.13.0.130:22003"},{_id:2,host:"10.13.0.131:22003",arbiterOnly:true}]})
```
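The three `rs.initiate()` calls follow a pattern. Shard N starts its member list at the N-th host and wraps around, and the last host in that order is the arbiter. As a result, every host carries two data-bearing members and exactly one arbiter. The sketch below regenerates the three documents from that rule. `shardReplSet` is a hypothetical helper, and the rotation reflects this walkthrough's own choices rather than a MongoDB requirement.

```javascript
// Sketch: regenerate the three shard rs.initiate() documents used above.
// Pattern observed in this walkthrough: shard N lists the hosts starting at
// host N-1 (0-based) and wrapping around; the last host in that order is the arbiter.
const SHARD_HOSTS = ["10.13.0.130", "10.13.0.131", "10.13.0.132"];

function shardReplSet(n) {
  const port = 22000 + n; // shard1 -> 22001, shard2 -> 22002, shard3 -> 22003
  const order = SHARD_HOSTS.map((_, i) => SHARD_HOSTS[(n - 1 + i) % SHARD_HOSTS.length]);
  return {
    _id: `shard${n}ReplSet`,
    members: order.map((host, i) => {
      const member = { _id: i, host: `${host}:${port}` };
      // the arbiter votes in elections but holds no data
      if (i === order.length - 1) member.arbiterOnly = true;
      return member;
    }),
  };
}

for (const n of [1, 2, 3]) console.log(JSON.stringify(shardReplSet(n)));
```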

(III) Start a mongos router on each server:

```bash
mongos --configdb cfgReplSet/10.13.0.130:21000,10.13.0.131:21000,10.13.0.132:21000 --port 20000 --logpath /u01/mongodbtest/mongos/log/mongos.log --fork
```

```
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]# mongos --configdb cfgReplSet/10.13.0.130:21000,10.13.0.131:21000,10.13.0.132:21000 --port 20000 --logpath /u01/mongodbtest/mongos/log/mongos.log --fork
about to fork child process, waiting until server is ready for connections.
forked process: 18094
child process started successfully, parent exiting
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]#
```

To stress this again: if the config servers are not set up as a replica set and are listed in the mirrored style used up to MongoDB 3.2 (a plain host list without the replica set name), mongos refuses to start:

```
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]# mongos --configdb 10.13.0.130:21000,10.13.0.131:21000,10.13.0.132:21000 --port 20000 --logpath /u01/mongodbtest/mongos/log/mongos.log --fork
FailedToParse: mirrored config server connections are not supported; for config server replica sets be sure to use the replica set connection string
try 'mongos --help' for more information
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]#
```
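The only difference between the working and the failing invocation is the `<replSetName>/` prefix in `--configdb`. The sketch below expresses that rule as a simplified illustration. `parseConfigDb` is hypothetical and is explicitly not mongos's own parsing code.

```javascript
// Simplified illustration (NOT mongos's real parser) of the rule behind the
// error above: in 3.4, --configdb must look like "<replSetName>/host:port[,host:port...]".
function parseConfigDb(value) {
  const slash = value.indexOf("/");
  if (slash <= 0) {
    // No replica set name prefix: this is the old mirrored style
    throw new Error("mirrored config server connections are not supported");
  }
  const hosts = value.slice(slash + 1).split(",").filter((h) => h.length > 0);
  if (hosts.length === 0) throw new Error("no config server hosts listed");
  return { replSet: value.slice(0, slash), hosts };
}

console.log(parseConfigDb("cfgReplSet/10.13.0.130:21000,10.13.0.131:21000,10.13.0.132:21000"));
```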

At this point the data layer (shards plus replicas), the config servers and the mongos routers are all running.

The directory tree after installation:

```
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]# tree
.
├── config
│   ├── data
│   │   ├── collection-0--2075864821009270561.wt
│   │   ├── collection-12--2075864821009270561.wt
│   │   ├── collection-14--2075864821009270561.wt
│   │   ├── collection-19--2075864821009270561.wt
│   │   ├── collection-2--2075864821009270561.wt
│   │   ├── collection-22--2075864821009270561.wt
│   │   ├── collection-25--2075864821009270561.wt
│   │   ├── collection-29--2075864821009270561.wt
│   │   ├── collection-32--2075864821009270561.wt
│   │   ├── collection-36--2075864821009270561.wt
│   │   ├── collection-38--2075864821009270561.wt
│   │   ├── collection-4--2075864821009270561.wt
│   │   ├── collection-5--2075864821009270561.wt
│   │   ├── collection-7--2075864821009270561.wt
│   │   ├── collection-9--2075864821009270561.wt
│   │   ├── diagnostic.data
│   │   │   ├── metrics.2017-02-20T10-01-06Z-00000
│   │   │   └── metrics.interim
│   │   ├── index-10--2075864821009270561.wt
│   │   ├── index-11--2075864821009270561.wt
│   │   ├── index-1--2075864821009270561.wt
│   │   ├── index-13--2075864821009270561.wt
│   │   ├── index-15--2075864821009270561.wt
│   │   ├── index-16--2075864821009270561.wt
│   │   ├── index-17--2075864821009270561.wt
│   │   ├── index-18--2075864821009270561.wt
│   │   ├── index-20--2075864821009270561.wt
│   │   ├── index-21--2075864821009270561.wt
│   │   ├── index-23--2075864821009270561.wt
│   │   ├── index-24--2075864821009270561.wt
│   │   ├── index-26--2075864821009270561.wt
│   │   ├── index-27--2075864821009270561.wt
│   │   ├── index-28--2075864821009270561.wt
│   │   ├── index-30--2075864821009270561.wt
│   │   ├── index-31--2075864821009270561.wt
│   │   ├── index-3--2075864821009270561.wt
│   │   ├── index-33--2075864821009270561.wt
│   │   ├── index-34--2075864821009270561.wt
│   │   ├── index-35--2075864821009270561.wt
│   │   ├── index-37--2075864821009270561.wt
│   │   ├── index-39--2075864821009270561.wt
│   │   ├── index-6--2075864821009270561.wt
│   │   ├── index-8--2075864821009270561.wt
│   │   ├── journal
│   │   │   ├── WiredTigerLog.0000000001
│   │   │   ├── WiredTigerPreplog.0000000001
│   │   │   └── WiredTigerPreplog.0000000002
│   │   ├── _mdb_catalog.wt
│   │   ├── mongod.lock
│   │   ├── sizeStorer.wt
│   │   ├── storage.bson
│   │   ├── WiredTiger
│   │   ├── WiredTigerLAS.wt
│   │   ├── WiredTiger.lock
│   │   ├── WiredTiger.turtle
│   │   └── WiredTiger.wt
│   └── log
│       └── config.log
├── mongos
│   └── log
│       └── mongos.log
├── shard1
│   ├── data
│   │   ├── collection-0-3233402335532130874.wt
│   │   ├── collection-2-3233402335532130874.wt
│   │   ├── collection-4-3233402335532130874.wt
│   │   ├── collection-5-3233402335532130874.wt
│   │   ├── collection-7-3233402335532130874.wt
│   │   ├── collection-9-3233402335532130874.wt
│   │   ├── diagnostic.data
│   │   │   ├── metrics.2017-02-20T10-30-12Z-00000
│   │   │   └── metrics.interim
│   │   ├── index-10-3233402335532130874.wt
│   │   ├── index-1-3233402335532130874.wt
│   │   ├── index-3-3233402335532130874.wt
│   │   ├── index-6-3233402335532130874.wt
│   │   ├── index-8-3233402335532130874.wt
│   │   ├── _mdb_catalog.wt
│   │   ├── mongod.lock
│   │   ├── sizeStorer.wt
│   │   ├── storage.bson
│   │   ├── WiredTiger
│   │   ├── WiredTigerLAS.wt
│   │   ├── WiredTiger.lock
│   │   ├── WiredTiger.turtle
│   │   └── WiredTiger.wt
│   └── log
│       └── shard1.log
├── shard2
│   ├── data
│   │   ├── collection-0-8872345764405008471.wt
│   │   ├── collection-2-8872345764405008471.wt
│   │   ├── collection-4-8872345764405008471.wt
│   │   ├── collection-6-8872345764405008471.wt
│   │   ├── diagnostic.data
│   │   │   ├── metrics.2017-02-21T08-32-29Z-00000
│   │   │   └── metrics.interim
│   │   ├── index-1-8872345764405008471.wt
│   │   ├── index-3-8872345764405008471.wt
│   │   ├── index-5-8872345764405008471.wt
│   │   ├── index-7-8872345764405008471.wt
│   │   ├── _mdb_catalog.wt
│   │   ├── mongod.lock
│   │   ├── sizeStorer.wt
│   │   ├── storage.bson
│   │   ├── WiredTiger
│   │   ├── WiredTigerLAS.wt
│   │   ├── WiredTiger.lock
│   │   ├── WiredTiger.turtle
│   │   └── WiredTiger.wt
│   └── log
│       └── shard2.log
└── shard3
    ├── data
    │   ├── collection-0-4649094397759884044.wt
    │   ├── collection-12-4649094397759884044.wt
    │   ├── collection-13-4649094397759884044.wt
    │   ├── collection-2-4649094397759884044.wt
    │   ├── collection-4-4649094397759884044.wt
    │   ├── collection-6-4649094397759884044.wt
    │   ├── diagnostic.data
    │   │   ├── metrics.2017-02-21T08-51-36Z-00000
    │   │   └── metrics.interim
    │   ├── index-14-4649094397759884044.wt
    │   ├── index-1-4649094397759884044.wt
    │   ├── index-3-4649094397759884044.wt
    │   ├── index-5-4649094397759884044.wt
    │   ├── index-7-4649094397759884044.wt
    │   ├── _mdb_catalog.wt
    │   ├── mongod.lock
    │   ├── sizeStorer.wt
    │   ├── storage.bson
    │   ├── WiredTiger
    │   ├── WiredTigerLAS.wt
    │   ├── WiredTiger.lock
    │   ├── WiredTiger.turtle
    │   └── WiredTiger.wt
    └── log
        └── shard3.log

19 directories, 122 files
[root@mysqlfb-01-CentOS67-CW-17F mongodbtest]#
```

Log in to a mongos router and add the shards:

```
[root@mongodb2 u01]# mongo --host localhost --port 20000
MongoDB shell version v3.4.2
connecting to: mongodb://localhost:20000/
MongoDB server version: 3.4.2
Server has startup warnings:
2017-02-28T23:58:39.358+0800 I CONTROL [main]
2017-02-28T23:58:39.358+0800 I CONTROL [main] ** WARNING: Access control is not enabled for the database.
2017-02-28T23:58:39.358+0800 I CONTROL [main] ** Read and write access to data and configuration is unrestricted.
2017-02-28T23:58:39.358+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.
2017-02-28T23:58:39.358+0800 I CONTROL [main]
mongos> sh.addShard("shard1ReplSet/10.13.0.130:22001");
{ "shardAdded" : "shard1ReplSet", "ok" : 1 }
mongos> sh.addShard("shard2ReplSet/10.13.0.131:22002");
{ "shardAdded" : "shard2ReplSet", "ok" : 1 }
mongos> sh.addShard("shard3ReplSet/10.13.0.132:22003");
{ "shardAdded" : "shard3ReplSet", "ok" : 1 }
mongos>
```

Check the sharding status:

```
mongos> sh.status();
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("58aac2586715e0acb331e106")
  }
  shards:
    { "_id" : "shard1ReplSet", "host" : "shard1ReplSet/10.13.0.130:22001,10.13.0.131:22001", "state" : 1 }
    { "_id" : "shard2ReplSet", "host" : "shard2ReplSet/10.13.0.131:22002,10.13.0.132:22002", "state" : 1 }
    { "_id" : "shard3ReplSet", "host" : "shard3ReplSet/10.13.0.130:22003,10.13.0.132:22003", "state" : 1 }
  active mongoses:
    "3.4.2" : 3
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
      Balancer lock taken at Mon Feb 20 2017 18:18:01 GMT+0800 (CST) by ConfigServer:Balancer
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      No recent migrations
  databases:

mongos>
```

Check the shard configuration:

```
db.runCommand({listShards:1});
```

```
mongos> db.runCommand({listShards:1});
{
    "shards" : [
        {
            "_id" : "shard1ReplSet",
            "host" : "shard1ReplSet/10.13.0.130:22001,10.13.0.131:22001",
            "state" : 1
        },
        {
            "_id" : "shard2ReplSet",
            "host" : "shard2ReplSet/10.13.0.131:22002,10.13.0.132:22002",
            "state" : 1
        },
        {
            "_id" : "shard3ReplSet",
            "host" : "shard3ReplSet/10.13.0.130:22003,10.13.0.132:22003",
            "state" : 1
        }
    ],
    "ok" : 1
}
mongos>
```
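In both `sh.status()` and `listShards`, each shard's `host` string lists only its two data-bearing members. The arbiters (10.13.0.132:22001, 10.13.0.130:22002 and 10.13.0.131:22003) do not appear. The sketch below derives that string from each member list, together with the `sh.addShard()` argument used earlier. One reachable member is enough as a seed, and this walkthrough used member 0. The helper names are hypothetical. Sorting the hosts is an assumption that happens to match the output shown, so compare the hosts as sets if the order differs on your cluster.

```javascript
// Sketch: derive (a) the sh.addShard() argument and (b) the shard "host"
// string reported by listShards from each shard's member list.
// Observation from the output above: arbiters do not appear in "host".
const SHARD_SETS = {
  shard1ReplSet: [
    { host: "10.13.0.130:22001" }, { host: "10.13.0.131:22001" },
    { host: "10.13.0.132:22001", arbiterOnly: true },
  ],
  shard2ReplSet: [
    { host: "10.13.0.131:22002" }, { host: "10.13.0.132:22002" },
    { host: "10.13.0.130:22002", arbiterOnly: true },
  ],
  shard3ReplSet: [
    { host: "10.13.0.132:22003" }, { host: "10.13.0.130:22003" },
    { host: "10.13.0.131:22003", arbiterOnly: true },
  ],
};

// "<replSetName>/<seed member>": mongos discovers the other members itself.
function addShardArg(name, members) {
  return `${name}/${members[0].host}`;
}

// Data-bearing members only, sorted (assumed display order, see lead-in).
function expectedShardHost(name, members) {
  const dataHosts = members.filter((m) => !m.arbiterOnly).map((m) => m.host).sort();
  return `${name}/${dataHosts.join(",")}`;
}

for (const [name, members] of Object.entries(SHARD_SETS)) {
  console.log(`sh.addShard("${addShardArg(name, members)}")`, "->", expectedShardHost(name, members));
}
```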

The basic MongoDB CRUD (create, read, update, delete) operations are:

```
==> Data operations:
Insert documents:  db.collection.insert()
Query documents:   db.collection.find()
Update documents:  db.collection.update()
Delete documents:  db.collection.remove()

==> Collection operations:
Create a sharded collection:
  sh.shardCollection("xxx<db_name>.yyy<collection_name>", {col1: 1, col2: 1})
Drop a collection:
  db.yyy.drop()

==> Database operations:
Create a database with sharding enabled:
  sh.enableSharding("xxx")
Drop a database:
  use xxx
  db.dropDatabase();
```

Let's create a database with sharding enabled:

```
sh.enableSharding("oracleblog");
```

```
mongos> sh.enableSharding("oracleblog");
{ "ok" : 1 }
mongos>
```

Switch to the new database and create a collection sharded on the compound key {age: 1, name: 1}:

```
use oracleblog
sh.shardCollection("oracleblog.testtab",{age: 1, name: 1})
```

```
mongos> use oracleblog
switched to db oracleblog
mongos> sh.shardCollection("oracleblog.testtab",{age: 1, name: 1})
{ "collectionsharded" : "oracleblog.testtab", "ok" : 1 }
mongos>
```

Check the sharding information (before inserting data):

```
mongos> sh.status();
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("58aac2586715e0acb331e106")
  }
  shards:
    { "_id" : "shard1ReplSet", "host" : "shard1ReplSet/10.13.0.130:22001,10.13.0.131:22001", "state" : 1 }
    { "_id" : "shard2ReplSet", "host" : "shard2ReplSet/10.13.0.131:22002,10.13.0.132:22002", "state" : 1 }
    { "_id" : "shard3ReplSet", "host" : "shard3ReplSet/10.13.0.130:22003,10.13.0.132:22003", "state" : 1 }
  active mongoses:
    "3.4.2" : 3
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
      Balancer lock taken at Mon Feb 20 2017 18:18:01 GMT+0800 (CST) by ConfigServer:Balancer
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      8 : Success
  databases:
    { "_id" : "oracleblog", "primary" : "shard1ReplSet", "partitioned" : true }
      oracleblog.testtab
        shard key: { "age" : 1, "name" : 1 }
        unique: false
        balancing: true
        chunks:
          shard1ReplSet  1
        { "age" : { "$minKey" : 1 }, "name" : { "$minKey" : 1 } } -->> { "age" : { "$maxKey" : 1 }, "name" : { "$maxKey" : 1 } } on : shard1ReplSet Timestamp(1, 0)

mongos>
```

Insert data:

```
for (i=1;i<=10000;i++) db.testtab.insert({name: "user"+i, age: (i%150)})
```

```
mongos> for (i=1;i<=10000;i++) db.testtab.insert({name: "user"+i, age: (i%150)})
WriteResult({ "nInserted" : 1 })
mongos>
```

The shell prints only the result of the last statement, so `WriteResult({ "nInserted" : 1 })` refers to the final `insert()` call. The loop as a whole writes 10,000 documents.
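Because `age` is `i % 150`, the loop produces only 150 distinct ages (0 to 149), each shared by 66 or 67 documents. With so few distinct values, chunks cannot be split more finely than one age value. Pairing `age` with the near-unique `name` presumably avoids this limit. The sketch below recreates the same documents in memory, without MongoDB, and counts them per age.

```javascript
// Sketch: reproduce the 10,000 documents written by the shell loop above
// (age = i % 150) and count how many documents share each age value.
const docs = [];
for (let i = 1; i <= 10000; i++) docs.push({ name: "user" + i, age: i % 150 });

const perAge = new Map();
for (const d of docs) perAge.set(d.age, (perAge.get(d.age) || 0) + 1);

const counts = [...perAge.values()];
console.log("distinct ages:", perAge.size);
console.log("min/max documents per age:", Math.min(...counts), Math.max(...counts));
```

10,000 = 66 × 150 + 100, so ages 1 to 100 get one extra document (67) and ages 0 and 101 to 149 get 66.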

Check the sharding information (after inserting data). The collection is now split into three chunks, one on each shard:

```
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("58aac2586715e0acb331e106")
  }
  shards:
    { "_id" : "shard1ReplSet", "host" : "shard1ReplSet/10.13.0.130:22001,10.13.0.131:22001", "state" : 1 }
    { "_id" : "shard2ReplSet", "host" : "shard2ReplSet/10.13.0.131:22002,10.13.0.132:22002", "state" : 1 }
    { "_id" : "shard3ReplSet", "host" : "shard3ReplSet/10.13.0.130:22003,10.13.0.132:22003", "state" : 1 }
  active mongoses:
    "3.4.2" : 3
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
      Balancer lock taken at Mon Feb 20 2017 18:18:01 GMT+0800 (CST) by ConfigServer:Balancer
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      10 : Success
  databases:
    { "_id" : "oracleblog", "primary" : "shard1ReplSet", "partitioned" : true }
      oracleblog.testtab
        shard key: { "age" : 1, "name" : 1 }
        unique: false
        balancing: true
        chunks:
          shard1ReplSet  1
          shard2ReplSet  1
          shard3ReplSet  1
        { "age" : { "$minKey" : 1 }, "name" : { "$minKey" : 1 } } -->> { "age" : 2, "name" : "user2" } on : shard2ReplSet Timestamp(2, 0)
        { "age" : 2, "name" : "user2" } -->> { "age" : 22, "name" : "user22" } on : shard3ReplSet Timestamp(3, 0)
        { "age" : 22, "name" : "user22" } -->> { "age" : { "$maxKey" : 1 }, "name" : { "$maxKey" : 1 } } on : shard1ReplSet Timestamp(3, 1)

mongos>
```
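The chunk list above can be read as a routing table. Each chunk covers a half-open range [min, max) of the compound key {age, name}, compared field by field: age first, then name. The minimal sketch below implements that lookup under a simplifying assumption: `age` holds only numbers and `name` only strings, so MongoDB's full cross-type BSON ordering is not modelled. `routeDocument` and the other names are hypothetical. The example shows why {age: 2, name: "user152"} lands on shard2ReplSet: as a string, "user152" sorts before "user2".

```javascript
// Sketch: route a document to a shard using the chunk ranges printed by sh.status() above.
// Chunk ranges are [min, max): lower bound inclusive, upper bound exclusive.
const MIN_KEY = Symbol("MinKey");
const MAX_KEY = Symbol("MaxKey");

// Compare two values of one shard-key field. Simplification: each field here
// holds a single type (numbers for age, strings for name), so plain
// JavaScript comparison matches MongoDB's order for this data.
function compareField(a, b) {
  if (a === b) return 0;
  if (a === MIN_KEY || b === MAX_KEY) return -1;
  if (a === MAX_KEY || b === MIN_KEY) return 1;
  return a < b ? -1 : 1;
}

// Compound key {age: 1, name: 1}: compare age first, then name.
function compareKey(x, y) {
  return compareField(x.age, y.age) || compareField(x.name, y.name);
}

const CHUNKS = [
  { min: { age: MIN_KEY, name: MIN_KEY }, max: { age: 2, name: "user2" }, shard: "shard2ReplSet" },
  { min: { age: 2, name: "user2" }, max: { age: 22, name: "user22" }, shard: "shard3ReplSet" },
  { min: { age: 22, name: "user22" }, max: { age: MAX_KEY, name: MAX_KEY }, shard: "shard1ReplSet" },
];

function routeDocument(doc) {
  const chunk = CHUNKS.find((c) => compareKey(c.min, doc) <= 0 && compareKey(doc, c.max) < 0);
  return chunk.shard;
}

// "user152" < "user2" as strings, so this key falls in the first chunk
console.log(routeDocument({ age: 2, name: "user152" }));
```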

Query the records whose age is greater than 130:

```
db.testtab.find({age: {$gt: 130}})
```

```
mongos> db.testtab.find({age: {$gt: 130}})
{ "_id" : ObjectId("58ae5d5546c608a4e50b7f3c"), "name" : "user1031", "age" : 131 }
{ "_id" : ObjectId("58ae5d5546c608a4e50b7fd2"), "name" : "user1181", "age" : 131 }
{ "_id" : ObjectId("58ae5d5446c608a4e50b7bb8"), "name" : "user131", "age" : 131 }
{ "_id" : ObjectId("58ae5d5546c608a4e50b8068"), "name" : "user1331", "age" : 131 }
{ "_id" : ObjectId("58ae5d5546c608a4e50b80fe"), "name" : "user1481", "age" : 131 }
{ "_id" : ObjectId("58ae5d5546c608a4e50b8194"), "name" : "user1631", "age" : 131 }
{ "_id" : ObjectId("58ae5d5646c608a4e50b822a"), "name" : "user1781", "age" : 131 }
{ "_id" : ObjectId("58ae5d5646c608a4e50b82c0"), "name" : "user1931", "age" : 131 }
{ "_id" : ObjectId("58ae5d5646c608a4e50b8356"), "name" : "user2081", "age" : 131 }
{ "_id" : ObjectId("58ae5d5646c608a4e50b83ec"), "name" : "user2231", "age" : 131 }
{ "_id" : ObjectId("58ae5d5646c608a4e50b8482"), "name" : "user2381", "age" : 131 }
{ "_id" : ObjectId("58ae5d5646c608a4e50b8518"), "name" : "user2531", "age" : 131 }
{ "_id" : ObjectId("58ae5d5646c608a4e50b85ae"), "name" : "user2681", "age" : 131 }
{ "_id" : ObjectId("58ae5d5546c608a4e50b7c4e"), "name" : "user281", "age" : 131 }
{ "_id" : ObjectId("58ae5d5646c608a4e50b8644"), "name" : "user2831", "age" : 131 }
{ "_id" : ObjectId("58ae5d5646c608a4e50b86da"), "name" : "user2981", "age" : 131 }
{ "_id" : ObjectId("58ae5d5746c608a4e50b8770"), "name" : "user3131", "age" : 131 }
{ "_id" : ObjectId("58ae5d5746c608a4e50b8806"), "name" : "user3281", "age" : 131 }
{ "_id" : ObjectId("58ae5d5746c608a4e50b889c"), "name" : "user3431", "age" : 131 }
{ "_id" : ObjectId("58ae5d5746c608a4e50b8932"), "name" : "user3581", "age" : 131 }
Type "it" for more
mongos>
```
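All 20 documents shown have age 131, and their names appear in string order (user1031, user1181, user131, ...). That order is consistent with shard1ReplSet scanning the {age: 1, name: 1} index. Every key with age > 130 falls in the chunk owned by shard1ReplSet, so mongos should only need to query that shard. The in-memory sketch below reproduces the total match count and the first batch of 20 that the shell prints before `Type "it" for more`. MongoDB does not guarantee result order without `sort()`, so treat this as an explanation of this particular output, not a promise.

```javascript
// Sketch: reproduce the age > 130 query in memory. The documents match the
// shell loop above; sorting by (age, name) imitates a scan of the
// {age: 1, name: 1} shard key index (an assumption about this output's order).
const docs = [];
for (let i = 1; i <= 10000; i++) docs.push({ name: "user" + i, age: i % 150 });

const matches = docs
  .filter((d) => d.age > 130)
  .sort((a, b) => a.age - b.age || (a.name < b.name ? -1 : a.name > b.name ? 1 : 0));

console.log("matching documents:", matches.length);
// The mongo shell shows 20 documents per batch before 'Type "it" for more'
console.log(matches.slice(0, 20).map((d) => d.name).join(" "));
```

Ages 131 to 149 are 19 values with 66 documents each, so `it` would keep paging through 1,254 matches in total.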