I previously installed a two-node RAC in VMware. In this post we add a new node to that RAC. The current setup is:


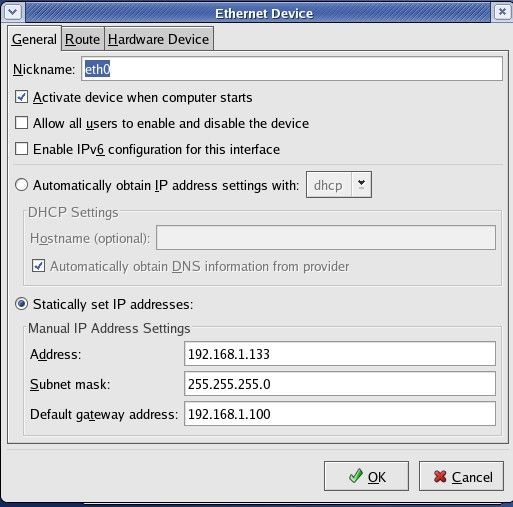

        rac1:
        IP:192.168.1.131
        Private-IP:10.10.10.31
        VIP:192.168.1.31

        rac2:
        IP:192.168.1.132
        Private-IP:10.10.10.32
        VIP:192.168.1.32

        The new node to add, rac3:
        rac3:
        IP:192.168.1.133
        Private-IP:10.10.10.33
        VIP:192.168.1.33

I. System preparation:

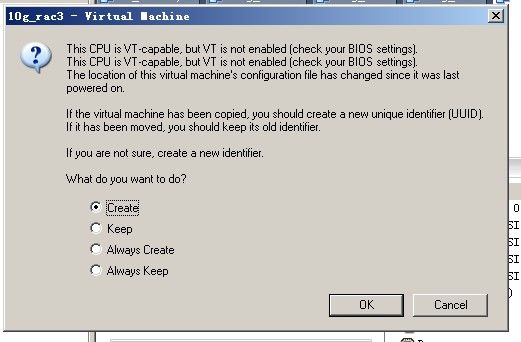

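1. Stop the two-node RAC, copy one of the nodes, and start only that copy. When VMware asks about the UUID at startup, choose Create.

2. During boot, when prompted to configure the network, enter any IP address and gateway; they can be corrected once the system is up.

3. After boot, change the IP addresses, add the node 3 entries to /etc/hosts, change the hostname to rac3, and finally re-activate the network interfaces.

4. Start rac1 and rac2 and edit /etc/hosts on both nodes to add the rac3 entries. The hosts file on all three nodes should look like this: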

        # Do not remove the following line, or various programs
        # that require network functionality will fail.
        127.0.0.1 localhost
        192.168.1.131 rac1.mycorpdomain.com rac1
        192.168.1.31 rac1-vip.mycorpdomain.com rac1-vip
        10.10.10.31 rac1-priv.mycorpdomain.com rac1-priv
        192.168.1.132 rac2.mycorpdomain.com rac2
        192.168.1.32 rac2-vip.mycorpdomain.com rac2-vip
        10.10.10.32 rac2-priv.mycorpdomain.com rac2-priv
        192.168.1.133 rac3.mycorpdomain.com rac3
        192.168.1.33 rac3-vip.mycorpdomain.com rac3-vip
        10.10.10.33 rac3-priv.mycorpdomain.com rac3-priv

        # ntp server
        192.168.1.189 HEJIANMIN
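Since the same nine names have to be present on every node, a quick check can catch a forgotten entry before the installer does. The following is a minimal sketch, not part of the original procedure; the function name `check_rac_hosts` is hypothetical, and it only checks that each short name appears somewhere in the file, not that the address next to it is correct.

```shell
# Hypothetical helper: report RAC host names that are missing from a hosts file.
# Usage: check_rac_hosts [FILE]    (FILE defaults to /etc/hosts)
check_rac_hosts() {
    hosts_file="${1:-/etc/hosts}"
    missing=0
    for node in rac1 rac2 rac3; do
        for suffix in "" -vip -priv; do
            name="${node}${suffix}"
            # Strip comments, then look for the short name as a whole
            # field after the address column.
            if ! awk -v n="$name" '
                    { sub(/#.*/, "") }
                    { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                    END { exit found ? 0 : 1 }' "$hosts_file"; then
                echo "missing: $name"
                missing=1
            fi
        done
    done
    return "$missing"
}

# Example (run on each of the three nodes):
#   check_rac_hosts /etc/hosts && echo "all RAC names present"
```

The function returns a non-zero status when any name is missing, so it can be used directly in an `if` or with `&&`.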

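The line 192.168.1.189 HEJIANMIN is the NTP server entry; the NTP server is my local Windows machine. For how to set up the NTP server, see here.

5. Modify the OCFS2 configuration to add the third node. The configuration file must be changed on all three machines. Edit /etc/ocfs2/cluster.conf so that it looks like this: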

        node:
            ip_port = 7777
            ip_address = 192.168.1.131
            number = 0
            name = rac1
            cluster = ocfs2

        node:
            ip_port = 7777
            ip_address = 192.168.1.132
            number = 1
            name = rac2
            cluster = ocfs2

        node:
            ip_port = 7777
            ip_address = 192.168.1.133
            number = 2
            name = rac3
            cluster = ocfs2

        cluster:
            node_count = 3
            name = ocfs2
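When adding a stanza by hand it is easy to forget to bump `node_count`. As a rough sanity check (a sketch only; `check_node_count` is a hypothetical name, not an OCFS2 tool), the number of `node:` stanzas can be compared with the declared count:

```shell
# Hypothetical helper: compare the number of "node:" stanzas in an OCFS2
# cluster.conf with the value of node_count.
# Usage: check_node_count [FILE]    (FILE defaults to /etc/ocfs2/cluster.conf)
check_node_count() {
    conf="${1:-/etc/ocfs2/cluster.conf}"
    # Count stanza headers that consist only of "node:".
    stanzas=$(grep -c '^node:[[:space:]]*$' "$conf" || true)
    # Extract the number after "node_count", tolerating spaces around "=".
    declared=$(sed -n 's/^[[:space:]]*node_count[[:space:]]*=[[:space:]]*\([0-9][0-9]*\)[[:space:]]*$/\1/p' "$conf")
    echo "node stanzas: $stanzas, node_count: $declared"
    [ "$stanzas" = "$declared" ]
}

# Example (run on each node after editing the file):
#   check_node_count /etc/ocfs2/cluster.conf || echo "node_count does not match"
```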

6. On rac3:

        /etc/init.d/o2cb unload
        Unmounting ocfs2_dlmfs filesystem: OK
        Unloading module "ocfs2_dlmfs": OK
        Unmounting configfs filesystem: OK
        Unloading module "configfs": OK

7. Reconfigure o2cb on rac3:

        [root@rac3 ocfs]# /etc/init.d/o2cb configure
        Configuring the O2CB driver.

        This will configure the on-boot properties of the O2CB driver.
        The following questions will determine whether the driver is loaded on
        boot. The current values will be shown in brackets ('[]'). Hitting
        <ENTER> without typing an answer will keep that current value. Ctrl-C
        will abort.

        Load O2CB driver on boot (y/n) [y]: y
        Cluster to start on boot (Enter "none" to clear) [ocfs2]:
        Specify heartbeat dead threshold (>=7) [61]: 61
        Writing O2CB configuration: OK
        Loading module "configfs": OK
        Mounting configfs filesystem at /config: OK
        Loading module "ocfs2_nodemanager": OK
        Loading module "ocfs2_dlm": OK
        Loading module "ocfs2_dlmfs": OK
        Mounting ocfs2_dlmfs filesystem at /dlm: OK
        Starting O2CB cluster ocfs2: OK
        [root@rac3 ocfs]#

8. Check the OCFS2 service on rac3:

        [root@rac3 ocfs]# /etc/init.d/o2cb status
        Module "configfs": Loaded
        Filesystem "configfs": Mounted
        Module "ocfs2_nodemanager": Loaded
        Module "ocfs2_dlm": Loaded
        Module "ocfs2_dlmfs": Loaded
        Filesystem "ocfs2_dlmfs": Mounted
        Checking O2CB cluster ocfs2: Online
        Checking O2CB heartbeat: Not active

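9. Reboot all three nodes so that the OCFS2 change takes effect on each of them.

Alternatively, the existing nodes can be handled one at a time:

Node 1: umount; o2cb stop; o2cb start; mount

Node 2: umount; o2cb stop; o2cb start; mount

This avoids taking the RAC service down on all nodes at once.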
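The one-node-at-a-time sequence can be written out as a dry run that only prints the commands for each node, so they can be reviewed and then executed by hand as root. This is a sketch under assumptions: the mount point `/ocfs` is inferred from the OCR location /ocfs/clusterware/ocr and the shell prompts in this post, `mount /ocfs` assumes an /etc/fstab entry for it, and anything holding files open on the volume has to be stopped on that node first. The function name `ocfs_restart_plan` is hypothetical.

```shell
# Hypothetical dry run: print the per-node OCFS2 restart sequence.
# Nothing is executed; review the output, then run the commands as root
# on one node at a time.
ocfs_restart_plan() {
    mount_point="/ocfs"    # assumption: where the OCFS2 volume is mounted
    for node in "$@"; do
        echo "[$node] umount $mount_point"
        echo "[$node] /etc/init.d/o2cb stop"
        echo "[$node] /etc/init.d/o2cb start"
        echo "[$node] mount $mount_point"
    done
}

# Example:
#   ocfs_restart_plan rac1 rac2
```

Finishing all four commands on one node before touching the next is what keeps at least one node serving requests throughout.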

10. Configure SSH user equivalence.

On rac3:

        rac3-> cd
        rac3-> cd .ssh
        rac3-> rm *
        rac3-> ssh-keygen -t rsa
        Generating public/private rsa key pair.
        Enter file in which to save the key (/export/home/oracle/.ssh/id_rsa):
        Enter passphrase (empty for no passphrase):
        Enter same passphrase again:
        Your identification has been saved in /export/home/oracle/.ssh/id_rsa.
        Your public key has been saved in /export/home/oracle/.ssh/id_rsa.pub.
        The key fingerprint is:
        c8:86:12:ed:96:57:33:84:4f:47:00:95:ad:25:52:dd oracle@rac3.mycorpdomain.com
        rac3-> ssh-keygen -t dsa
        Generating public/private dsa key pair.
        Enter file in which to save the key (/export/home/oracle/.ssh/id_dsa):
        Enter passphrase (empty for no passphrase):
        Enter same passphrase again:
        Your identification has been saved in /export/home/oracle/.ssh/id_dsa.
        Your public key has been saved in /export/home/oracle/.ssh/id_dsa.pub.
        The key fingerprint is:
        ff:9a:1d:f1:9a:40:48:ce:2c:b2:31:01:79:6e:a3:ac oracle@rac3.mycorpdomain.com
        rac3->
        rac3->
        rac3-> ll
        total 32
        -rw------- 1 oracle oinstall 668 Jun 28 16:35 id_dsa
        -rw-r--r-- 1 oracle oinstall 618 Jun 28 16:35 id_dsa.pub
        -rw------- 1 oracle oinstall 883 Jun 28 16:35 id_rsa
        -rw-r--r-- 1 oracle oinstall 238 Jun 28 16:35 id_rsa.pub
        rac3->
        rac3->
        rac3->
        rac3-> cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
        rac3-> cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
        rac3-> ll
        total 40
        -rw-r--r-- 1 oracle oinstall 856 Jun 28 16:36 authorized_keys
        -rw------- 1 oracle oinstall 668 Jun 28 16:35 id_dsa
        -rw-r--r-- 1 oracle oinstall 618 Jun 28 16:35 id_dsa.pub
        -rw------- 1 oracle oinstall 883 Jun 28 16:35 id_rsa
        -rw-r--r-- 1 oracle oinstall 238 Jun 28 16:35 id_rsa.pub
        rac3->
        rac3->
        rac3-> ssh rac2 cat ~/.ssh/id_rsa.pub
        The authenticity of host 'rac2 (192.168.1.132)' can't be established.
        RSA key fingerprint is aa:e4:aa:8d:bc:8b:59:da:a5:f8:4f:de:b9:4b:6a:30.
        Are you sure you want to continue connecting (yes/no)? yes
        Warning: Permanently added 'rac2,192.168.1.132' (RSA) to the list of known hosts.
        oracle@rac2's password:
        ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAIEApj3pcBEu8xCAzBSW9ZD1AQQTICAGJb5EkVM/HmgkvkrXZS16oQ7APk4abUNFknR93/takk61P0/zapF74R3YP0ycTLGQj6T6215Hp3yaN7vsMphn2Wn0LC4jtkEeS7bKazpxeuFwk6baHfNQ5RuTLMVs97rklF8eLjigLlj/so8= oracle@rac2.mycorpdomain.com
        rac3->
        rac3->
        rac3->
        rac3->
        rac3-> ssh rac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
        oracle@rac2's password:
        rac3->
        rac3-> ssh rac2 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
        oracle@rac2's password:
        rac3-> ssh rac1 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
        The authenticity of host 'rac1 (192.168.1.131)' can't be established.
        RSA key fingerprint is aa:e4:aa:8d:bc:8b:59:da:a5:f8:4f:de:b9:4b:6a:30.
        Are you sure you want to continue connecting (yes/no)? ys
        Please type 'yes' or 'no': yes
        Warning: Permanently added 'rac1,192.168.1.131' (RSA) to the list of known hosts.
        oracle@rac1's password:
        rac3->
        rac3->
        rac3-> ssh rac1 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
        oracle@rac1's password:
        rac3->
        rac3->
        rac3->
        rac3->
        rac3-> scp ~/.ssh/authorized_keys rac2:~/.ssh/authorized_keys
        oracle@rac2's password:
        authorized_keys 100% 2568 2.5KB/s 00:00
        rac3->
        rac3-> scp ~/.ssh/authorized_keys rac1:~/.ssh/authorized_keys
        oracle@rac1's password:
        authorized_keys 100% 2568 2.5KB/s 00:00
        rac3->

Then run the following commands on all three nodes and check whether any of them still prompts for a password. If it does not work the first time, run them once more:

        ssh rac1 date
        ssh rac2 date
        ssh rac3 date
        ssh rac1-priv date
        ssh rac2-priv date
        ssh rac3-priv date
        ssh rac1.mycorpdomain.com date
        ssh rac2.mycorpdomain.com date
        ssh rac3.mycorpdomain.com date
        ssh rac1-priv.mycorpdomain.com date
        ssh rac2-priv.mycorpdomain.com date
        ssh rac3-priv.mycorpdomain.com date
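Once every host key has been accepted interactively with the commands above, the same twelve checks can be repeated in a loop. The sketch below is an illustration rather than part of the original procedure; the function names are hypothetical. It uses the OpenSSH option `BatchMode=yes`, which makes `ssh` fail instead of prompting, so a name that would still ask for a password (or whose host key is not yet known) shows up as `FAIL`.

```shell
# Hypothetical helper: list the twelve host names used in the checks above.
rac_ssh_targets() {
    for node in rac1 rac2 rac3; do
        for suffix in "" -priv; do
            echo "${node}${suffix}"
            echo "${node}${suffix}.mycorpdomain.com"
        done
    done
}

# Hypothetical helper: try "ssh HOST date" for every name without prompting.
check_ssh_equivalence() {
    failed=0
    for host in $(rac_ssh_targets); do
        # BatchMode=yes turns any password or host-key prompt into a failure.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
            echo "ok:   $host"
        else
            echo "FAIL: $host"
            failed=1
        fi
    done
    return "$failed"
}

# Example (run as the oracle user on each of the three nodes):
#   check_ssh_equivalence
```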

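11. Update the oracle user's environment variables on rac3 to use devdb3.

12. Since all we need from the copy is the operating system, /u01/app and /u01/oradata can be deleted on rac3 at this point.

(Note: system preparation is now complete. If circumstances permit, this is a good moment to shut down the three VMware machines and take a backup.)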

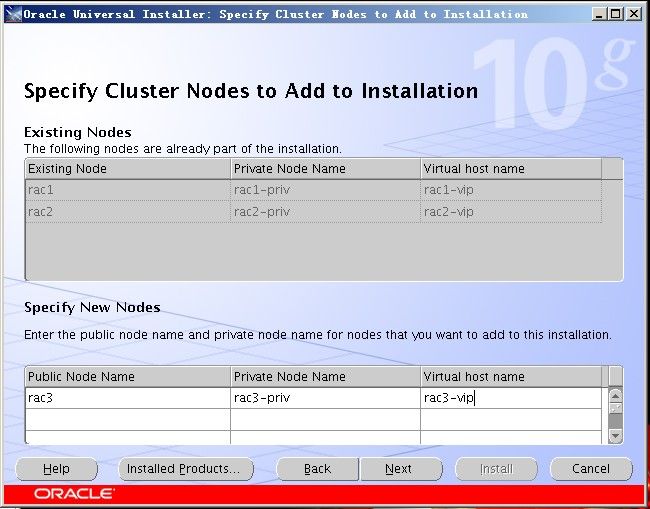

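II. Installing CRS:

1. Start Xmanager, export DISPLAY on rac1, and then run addNode.sh as the oracle user:

cd /u01/app/oracle/product/10.2.0/crs_1/oui/bin

./addNode.sh

The remaining steps are shown in the screenshots:

Here, as prompted, run the following on rac1 as root: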

        [root@rac1 ~]# sh /u01/app/oracle/product/10.2.0/crs_1/install/rootaddnode.sh
        clscfg: EXISTING configuration version 3 detected.
        clscfg: version 3 is 10G Release 2.
        Attempting to add 1 new nodes to the configuration
        Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.
        node <nodenumber>: <nodename> <private interconnect name> <hostname>
        node 3: rac3 rac3-priv rac3
        Creating OCR keys for user 'root', privgrp 'root'..
        Operation successful.
        /u01/app/oracle/product/10.2.0/crs_1/bin/srvctl add nodeapps -n rac3 -A rac3-vip/255.255.255.0/eth0 -o /u01/app/oracle/product/10.2.0/crs_1
        [root@rac1 ~]#

On rac3, run as root:

        [root@rac3 10.2.0]# sh /u01/app/oracle/product/10.2.0/crs_1/root.sh
        WARNING: directory '/u01/app/oracle/product/10.2.0' is not owned by root
        WARNING: directory '/u01/app/oracle/product' is not owned by root
        WARNING: directory '/u01/app/oracle' is not owned by root
        WARNING: directory '/u01/app' is not owned by root
        WARNING: directory '/u01' is not owned by root
        Checking to see if Oracle CRS stack is already configured
        OCR LOCATIONS = /ocfs/clusterware/ocr
        OCR backup directory '/u01/app/oracle/product/10.2.0/crs_1/cdata/crs' does not exist. Creating now
        Setting the permissions on OCR backup directory
        Setting up NS directories
        Oracle Cluster Registry configuration upgraded successfully
        WARNING: directory '/u01/app/oracle/product/10.2.0' is not owned by root
        WARNING: directory '/u01/app/oracle/product' is not owned by root
        WARNING: directory '/u01/app/oracle' is not owned by root
        WARNING: directory '/u01/app' is not owned by root
        WARNING: directory '/u01' is not owned by root
        clscfg: EXISTING configuration version 3 detected.
        clscfg: version 3 is 10G Release 2.
        assigning default hostname rac1 for node 1.
        assigning default hostname rac2 for node 2.
        Successfully accumulated necessary OCR keys.
        Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.
        node <nodenumber>: <nodename> <private interconnect name> <hostname>
        node 1: rac1 rac1-priv rac1
        node 2: rac2 rac2-priv rac2
        clscfg: Arguments check out successfully.

        NO KEYS WERE WRITTEN. Supply -force parameter to override.
        -force is destructive and will destroy any previous cluster
        configuration.
        Oracle Cluster Registry for cluster has already been initialized
        Startup will be queued to init within 90 seconds.
        Adding daemons to inittab
        Expecting the CRS daemons to be up within 600 seconds.
        CSS is active on these nodes.
        rac1
        rac2
        rac3
        CSS is active on all nodes.
        Waiting for the Oracle CRSD and EVMD to start
        Waiting for the Oracle CRSD and EVMD to start
        Oracle CRS stack installed and running under init(1M)
        Running vipca(silent) for configuring nodeapps
        IP address "rac1-vip" has already been used. Enter an unused IP address.
        [root@rac3 10.2.0]#

2. After rootaddnode.sh on rac1 and root.sh on rac3 have finished, register the local ONS service by running the following on rac3:

$ORA_CRS_HOME/bin/racgons add_conf rac3:4948

3. Check the installation:

        /u01/app/oracle/product/10.2.0/crs_1/bin/cluvfy stage -post crsinst -n rac1,rac2,rac3

        Performing post-checks for cluster services setup

        Checking node reachability...
        Node reachability check passed from node "rac3".

        Checking user equivalence...
        User equivalence check passed for user "oracle".

        Checking Cluster manager integrity...

        Checking CSS daemon...
        Daemon status check passed for "CSS daemon".

        Cluster manager integrity check passed.

        Checking cluster integrity...

        Cluster integrity check passed

        Checking OCR integrity...

        Checking the absence of a non-clustered configuration...
        All nodes free of non-clustered, local-only configurations.

        Uniqueness check for OCR device passed.

        Checking the version of OCR...
        OCR of correct Version "2" exists.

        Checking data integrity of OCR...
        Data integrity check for OCR passed.

        OCR integrity check passed.

        Checking CRS integrity...

        Checking daemon liveness...
        Liveness check passed for "CRS daemon".

        Checking daemon liveness...
        Liveness check passed for "CSS daemon".

        Checking daemon liveness...
        Liveness check passed for "EVM daemon".

        Checking CRS health...
        CRS health check passed.

        CRS integrity check passed.

        Checking node application existence...

        Checking existence of VIP node application (required)
        Check passed.

        Checking existence of ONS node application (optional)
        Check passed.

        Checking existence of GSD node application (optional)
        Check passed.

        Post-check for cluster services setup was successful.
        rac3->
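The cluvfy report is long, and the line that matters most is the last one. As a small convenience (a sketch, with the hypothetical function name `cluvfy_passed`), the output can be piped through a filter that succeeds only when that final success message is present:

```shell
# Hypothetical helper: read cluvfy output on stdin and succeed only if the
# final success line of the post-crsinst check is present.
cluvfy_passed() {
    grep -q 'Post-check for cluster services setup was successful'
}

# Example (as the oracle user on rac3):
#   /u01/app/oracle/product/10.2.0/crs_1/bin/cluvfy stage -post crsinst -n rac1,rac2,rac3 \
#       | cluvfy_passed && echo "CRS post-check OK"
```

If the filter fails, rerun cluvfy without it and read the full report to see which check did not pass.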

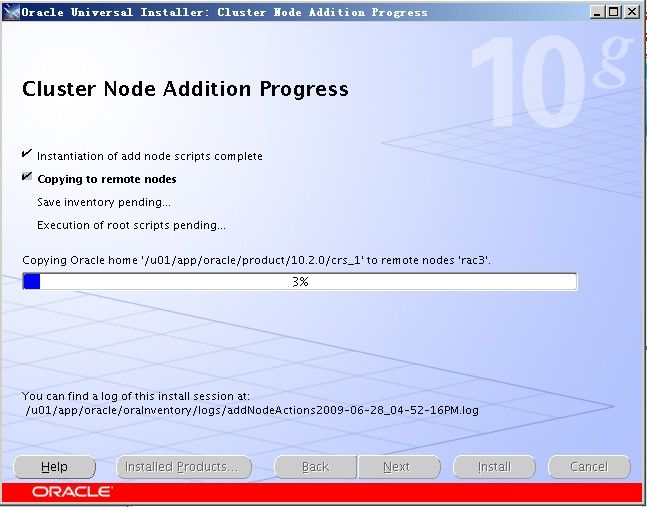

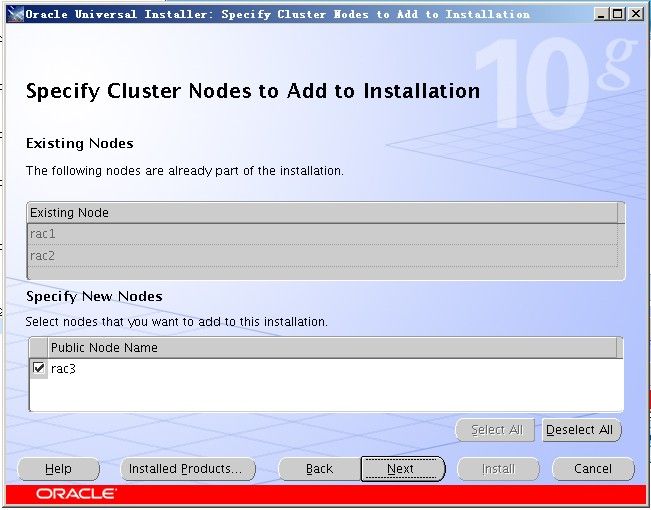

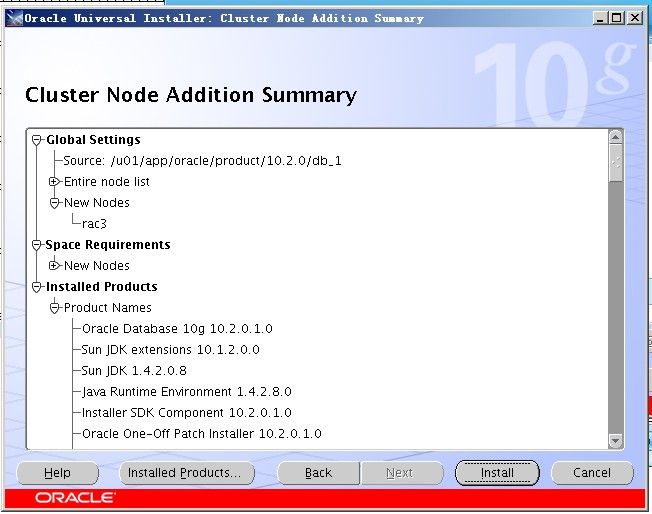

III. Installing the Oracle software:

1. On rac1, export DISPLAY through Xmanager first, and then run $ORACLE_HOME/oui/bin/addNode.sh

Again, the screenshots tell the story :)

        [root@rac3 rpm]# sh /u01/app/oracle/product/10.2.0/db_1/root.sh
        Running Oracle10 root.sh script...

        The following environment variables are set as:
        ORACLE_OWNER= oracle
        ORACLE_HOME= /u01/app/oracle/product/10.2.0/db_1

        Enter the full pathname of the local bin directory: [/usr/local/bin]:
        The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n)
        [n]:
        The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)
        [n]:
        The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)
        [n]:

        Entries will be added to the /etc/oratab file as needed by
        Database Configuration Assistant when a database is created
        Finished running generic part of root.sh script.
        Now product-specific root actions will be performed.
        [root@rac3 rpm]#

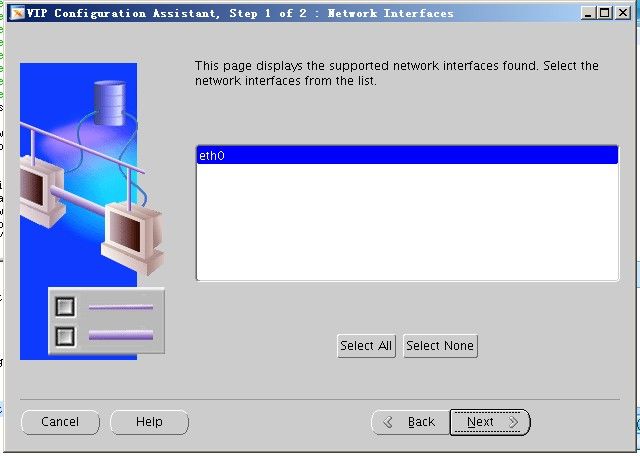

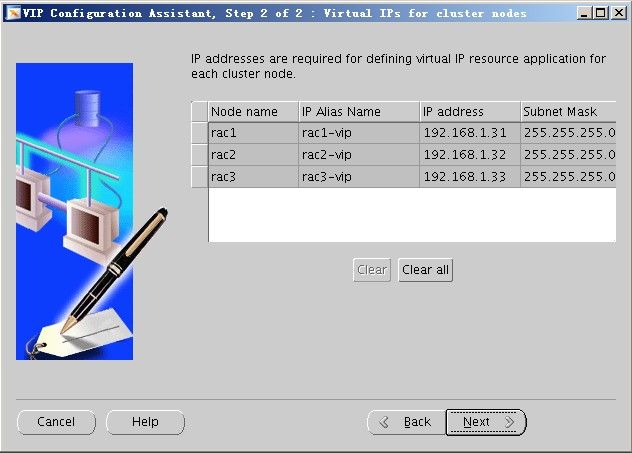

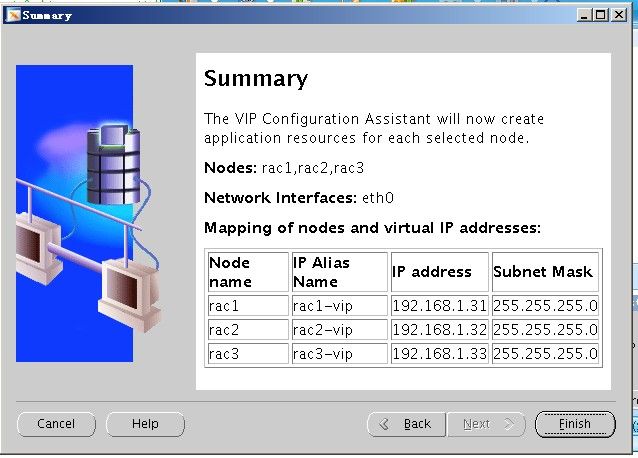

2. On rac3, as root, run /u01/app/oracle/product/10.2.0/crs_1/bin/vipca -nodelist rac1,rac2,rac3 to check that the VIP configuration is correct.

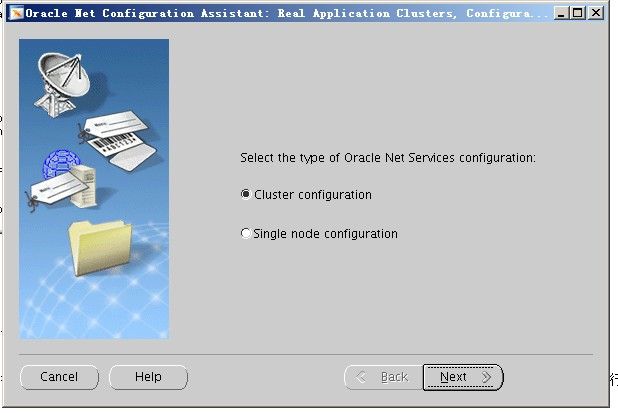

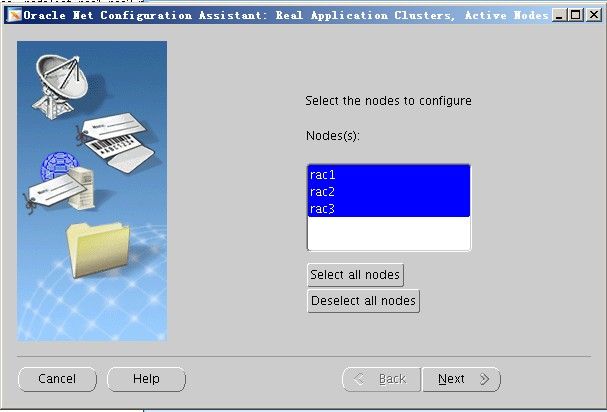

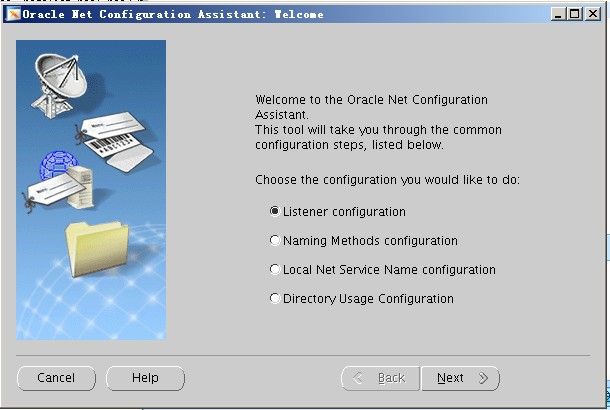

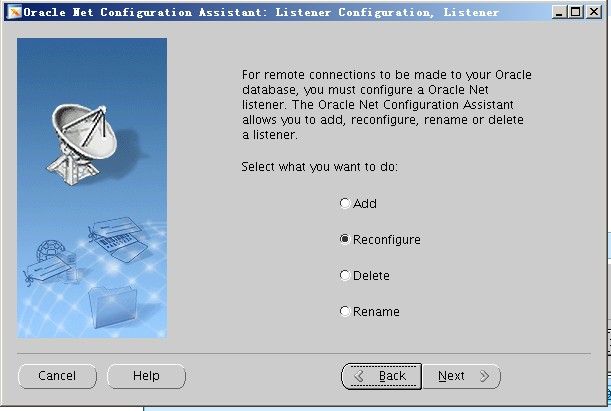

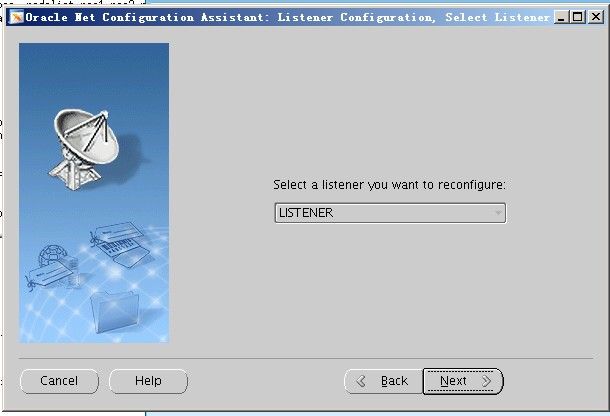

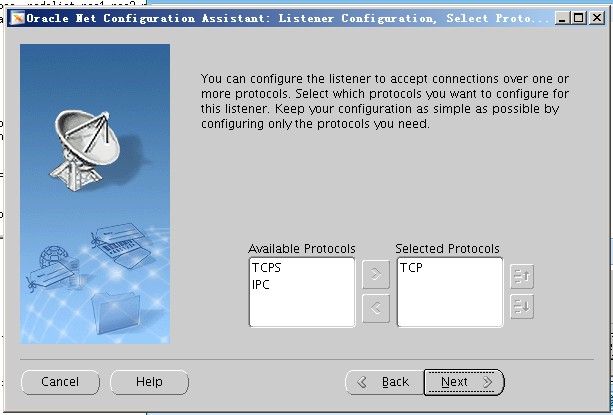

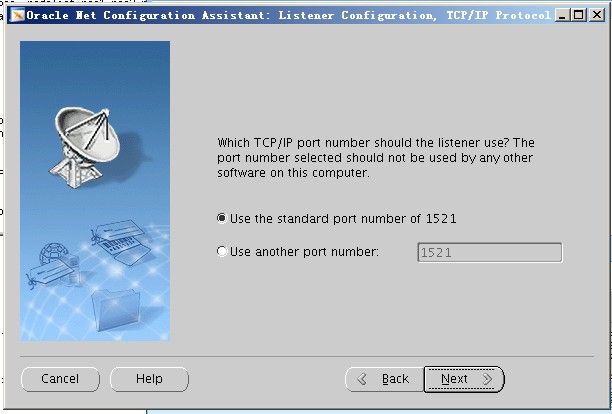

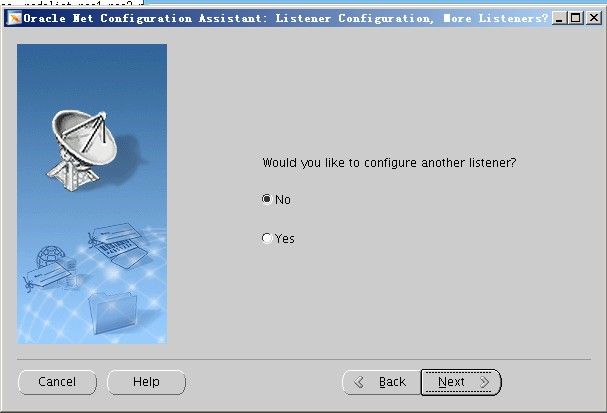

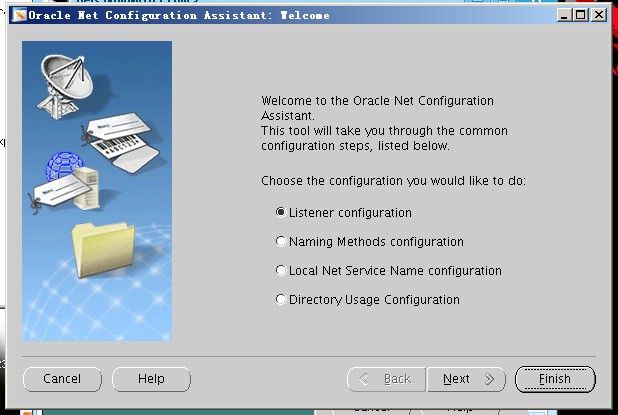

3. Configure the listener on rac3

On rac3, run netca as the oracle user.

IV. Creating the instance on rac3:

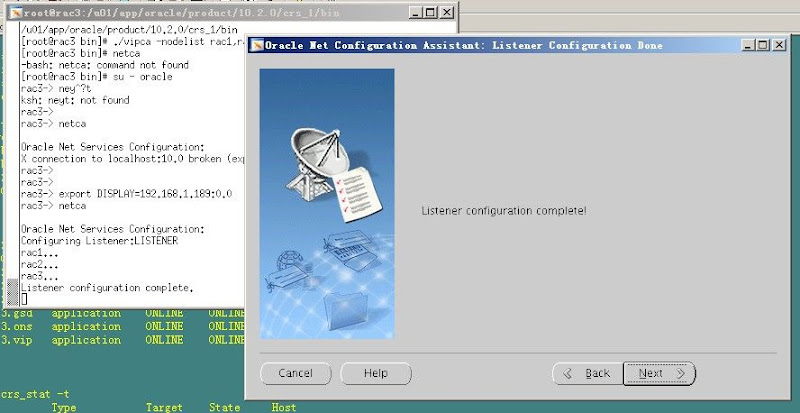

1. Check the status of the cluster resources, then start creating the instance on rac3:

        rac2-> crs_stat -t
        Name           Type           Target    State     Host
        ------------------------------------------------------------
        ora.devdb.db   application    ONLINE    ONLINE    rac2
        ora....b1.inst application    ONLINE    ONLINE    rac1
        ora....b2.inst application    ONLINE    ONLINE    rac2
        ora....SM1.asm application    ONLINE    ONLINE    rac1
        ora....C1.lsnr application    ONLINE    ONLINE    rac1
        ora.rac1.gsd   application    ONLINE    ONLINE    rac1
        ora.rac1.ons   application    ONLINE    ONLINE    rac1
        ora.rac1.vip   application    ONLINE    ONLINE    rac1
        ora....SM2.asm application    ONLINE    ONLINE    rac2
        ora....C2.lsnr application    ONLINE    ONLINE    rac2
        ora.rac2.gsd   application    ONLINE    ONLINE    rac2
        ora.rac2.ons   application    ONLINE    ONLINE    rac2
        ora.rac2.vip   application    ONLINE    ONLINE    rac2
        ora....C3.lsnr application    ONLINE    ONLINE    rac3
        ora.rac3.gsd   application    ONLINE    ONLINE    rac3
        ora.rac3.ons   application    ONLINE    ONLINE    rac3
        ora.rac3.vip   application    ONLINE    ONLINE    rac3
        rac2->
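With this many resources it is easier to list only the ones that are not fully online than to scan the whole table. The filter below is a sketch that assumes the `crs_stat -t` layout shown above (a header line, a dashed separator, then the columns Name, Type, Target, State, Host); the function name `crs_not_online` is hypothetical.

```shell
# Hypothetical helper: read "crs_stat -t" output on stdin and print the
# name, target and state of every resource that is not ONLINE/ONLINE.
crs_not_online() {
    # NR > 2 skips the header and the dashed separator line.
    awk 'NR > 2 && NF >= 4 && ($3 != "ONLINE" || $4 != "ONLINE") { print $1, $3, $4 }'
}

# Example:
#   crs_stat -t | crs_not_online
```

Empty output means every listed resource has both Target and State set to ONLINE.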

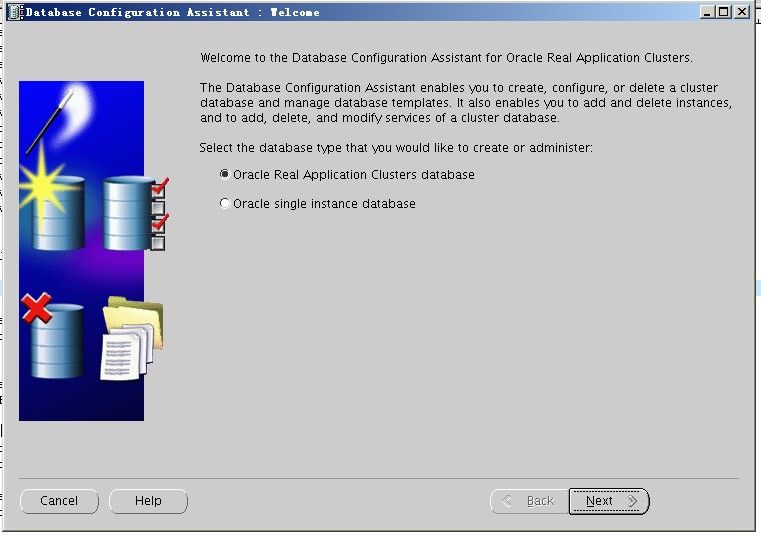

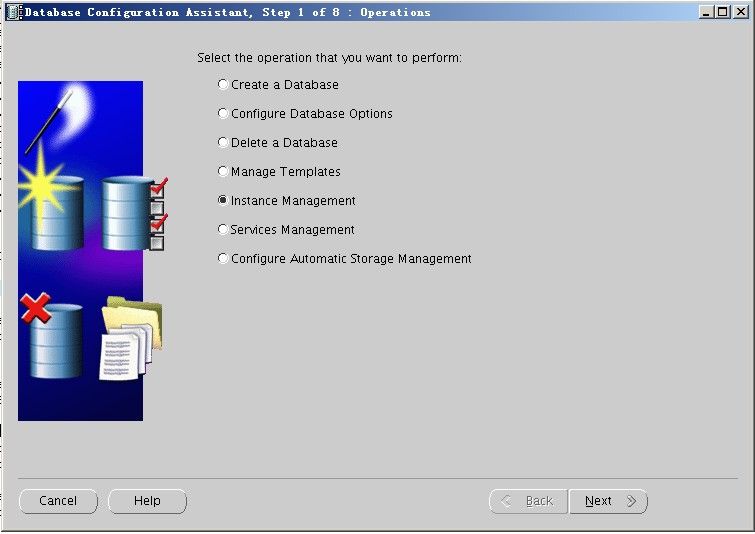

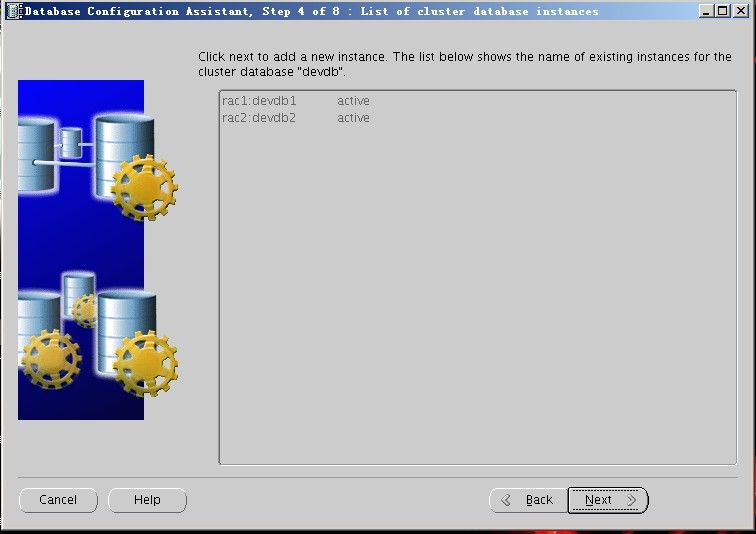

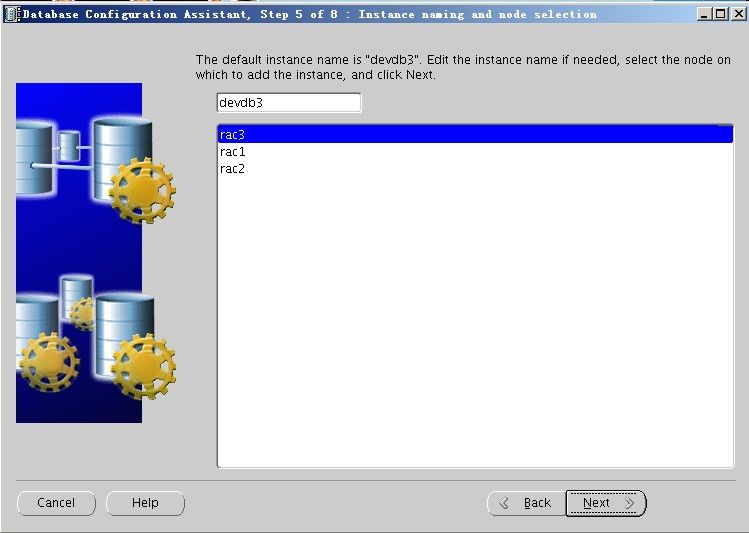

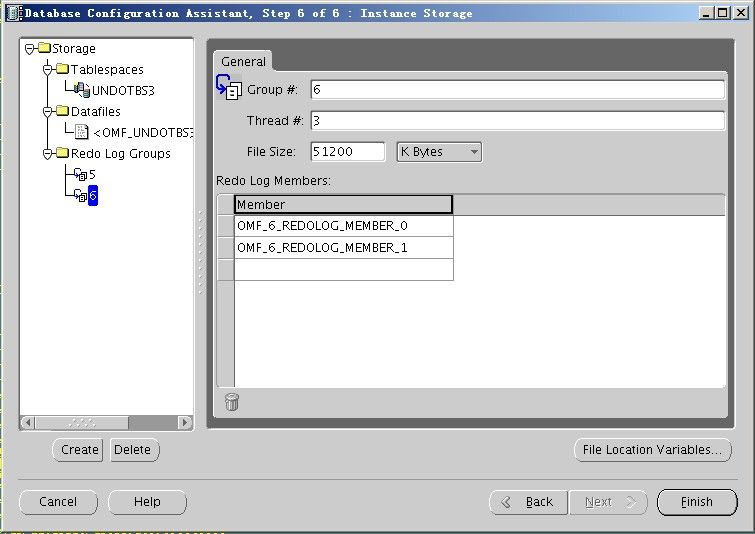

2. Run dbca on rac1:

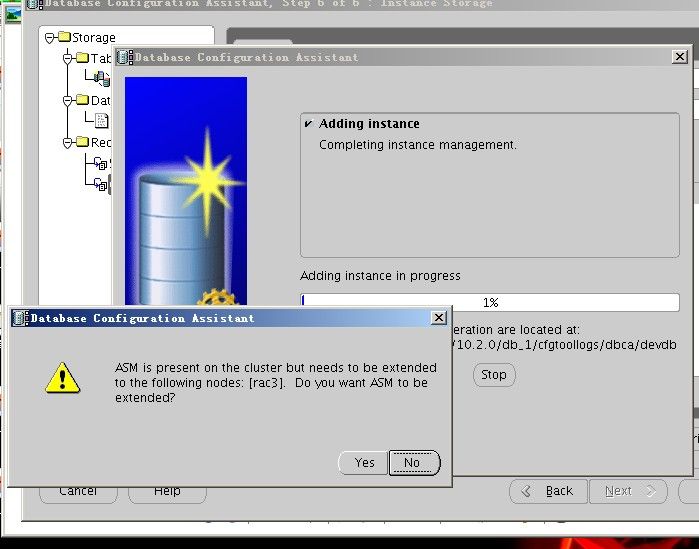

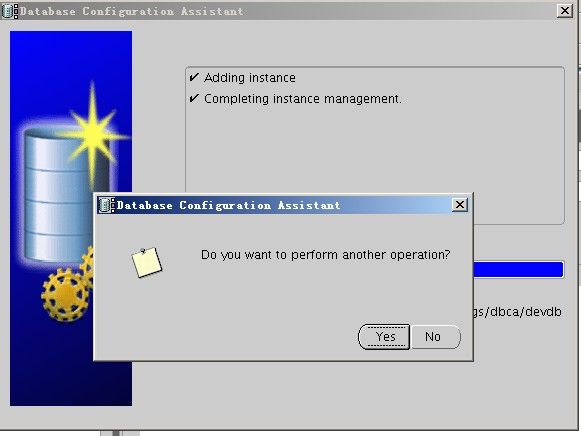

Click YES.

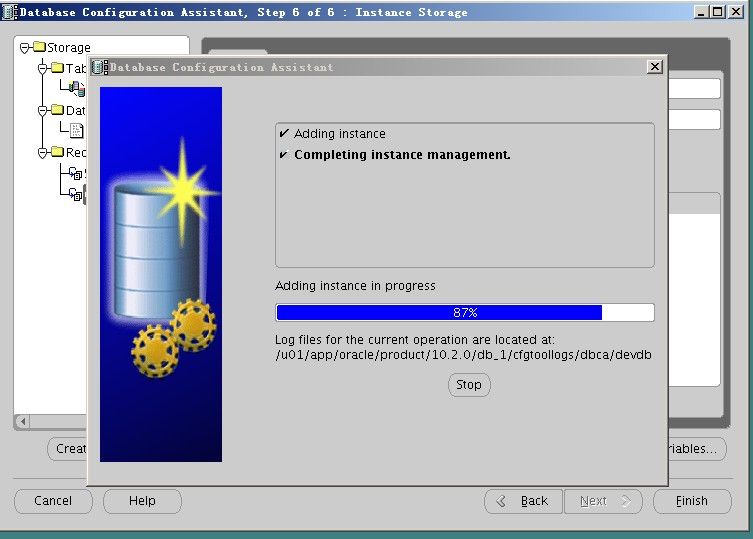

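Click NO to finish the installation.

At this point the whole installation is complete. crs_stat -t shows that all resources are online, and querying the gv$ views in the database shows the status of each instance.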
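For the gv$ check, one possible query is against `gv$instance`, which has one row per running instance. The sketch below assumes it is run as the oracle user on one of the nodes with ORACLE_HOME and ORACLE_SID set and operating-system authentication available for `/ as sysdba`; the function names are hypothetical.

```shell
# Hypothetical helper: print a query listing every instance in the cluster.
# The heredoc is quoted so that the shell does not expand "$instance".
instance_status_sql() {
    cat <<'EOF'
set linesize 120 pagesize 50
select inst_id, instance_name, host_name, status
  from gv$instance
 order by inst_id;
EOF
}

# Hypothetical helper: run the query through SQL*Plus in silent mode.
show_instances() {
    instance_status_sql | sqlplus -S "/ as sysdba"
}

# Example:
#   show_instances
```

After a successful node addition, the result should contain three rows, one each for the instances on rac1, rac2 and rac3.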
